Published - Sat, 30 Apr 2022

What does it takes to excel in the profession of Data Science

Today’s data science professionals are in high demand across a number of fields ranging from business operations and financial services to healthcare, science and more. A data scientist is an expert who uses data to extract valuable business insights. These professionals should have extensive knowledge related to computer science, data visualization and data mining along with statistics and machine learning. However, it all starts with learning the fundamentals.

What You Should Learn:

1) Coding

A data scientist should learn to code and create programs. They should have a solid understanding of basic coding languages, advanced analytical platforms and front end web visualization. For example:

Python

Python is becoming an increasingly popular programming language. The platform is useful for a variety of processes performed by data scientists. Python’s versatility enables users to accomplish a variety of tasks that might include creating data sets or importing SQL tables. The platform is also recognized as being easy to pick up, making it a good choice for new data professionals because it helps at every stage of your career. New data analysts can learn Python quickly, but Python still has exceptional value for experienced professionals. While programmers rely on Python in many established fields, data analysts can also use Python for emerging processes.

Python can even help to prepare you to learn other skills and languages down the line. Taken together, those factors make Python a great choice if you’re looking to learn a programming language. The Python platform is also open-source, making it free to install, so you don’t have to pay anything to start honing your Python skills. It is also known for its strong online community. The Python online community provides support, education, interaction and projects. There is also a great deal of potential for Python to become a common language for producing web-based analytics products and data science.

SQL

SQL is often a required skill for data scientists and is used to accomplish various functions that include adding, deleting or extracting information from databases. SQL also has the capability to perform analytical tasks. By using the platform’s precise commands, users are able to perform inquiries more quickly. Because of the prevalence of databases in today’s world, data professionals should at least have a basic familiarity with SQL. There is a growing demand for those who are experts in databases, so you can even specialize in SQL developing. Whether you want to turn SQL into a career or just want to supplement your programming knowledge, you’ll appreciate a quick introduction to the highlights of SQL.

Before getting started with SQL, you should understand what exactly it is. As mentioned, it’s a language that lets us connect with databases. This is key since data is a crucial component of mobile and web applications, from profile information to those we follow on social media to cookies. Applications and websites use databases to hold the data. Professionals use SQL to interact with that data via a programming language.

JavaScript

JavaScript is considered by many to be the scripting language of the web. This is a programming language or scripting that makes it possible to implement complex actions on a web page. JavaScript is part of nearly everything a website does that is more advanced than showing static information. JavaScript is incredibly versatile and can be used for both server-side and client-side language. Although not always the case, many Data Analytics boot camps include JavaScript in their curriculum.

JavaScript builds on the web technologies of CSS and HTML, which are the other standards. As a quick reminder, HTML is a markup language that defines paragraphs and provides structure. CSS incorporates style rules, applying them to HTML. JavaScript allows for many other things, such as animating images and controlling multimedia.

HTML

HTML stands for HyperText Markup Language and is the code that structures content on a website. It is important to note that HTML is not a type of coding language. Instead, it is a markup language. As a markup language, HTML outlines the structure for content. HTML includes various elements that you use to wrap or enclose portions of the content, with the goal of having it act or appear the way you want. The tags can do things such as add hyperlinks, change font size and italicize words, among others.

Having the knowledge to build your own website with HTML gives you the chance to stand out from the crowd with an authentic, hand-crafted representation of your business — or any business for that matter. This powerful coding language is not only helpful to web developers, and you just might find yourself in a position where you may need them in a professional data setting.

2) Data Visualization

The amount of data that businesses and industries produce today is greater than ever before. However, in order to be useful, the data must be converted into a format that is easily comprehended. A data scientist uses D3.js, ggplot, Matplotlib, Tableau and other tools for this purpose. By organizing and transforming data into usable formats, companies are able to make informed decisions based on the results.

3) Working With Unstructured Data

Unstructured data refers to audio or visual feeds, blog posts, customer reviews and social media posts. The data included within multimedia formats often requires an ability to identify, analyze and manipulate the data in order to obtain critical information that may be beneficial to a company or industry.

4) Artificial Intelligence and Machine Learning

Data scientists who can create programs with artificial intelligence may find a benefit from advancing the program’s ability to learn independently. The program can use decision trees, logistic regression and other algorithms to analyze data sets, make predictions or solve problems once the platform receives a sufficient amount of data.

Machine learning is a powerful tool. When you teach a machine how to use an algorithm to identify patterns, it can use those patterns to predict outcomes without using any preconceived notions or pre-programmed rules. A machine can only improve its own learning by using the information it has been given, so machine learning isn’t successful unless users provide a diverse and large enough range of data.

5) Mathematics

Calculus, linear algebra and statistics are areas of math that data scientists should know in order to create their own data analysis platforms. A background in statistics is particularly helpful for understanding statistical distributions, estimators and tests. The results of statistical findings are commonly required by companies in order to make informed decisions.

What You Should Already Have:

1) Natural Curiosity

A data scientist needs to possess an innate desire to obtain more knowledge or information. This drive motivates them to begin the educational process and learn the field of data science in order to find answers and insights contained within data sets. Curiosity drives the best scientists forward despite obstacles to achieve the end result.

2) Effective Communication

The diagnoses, predictions or other findings that data scientists are able to formulate mean nothing to a company if they cannot comprehend the results. While presenting illustrated data, a data scientist must be able to explain how the results impact the business. As such, data scientists must be able to clearly translate their findings in order to make them useful to a company.

3) Commitment to Learning

The most successful data professionals will have a strong understanding of the core technical data analyst skills needed to succeed in the field. For example, it is important to develop a solid grasp in today’s most in-demand data languages such as SQL, NoSQL, Postgres/pgAdmin and MongoDB. It is also beneficial to learn advanced specialties like statistical modeling, forecasting and prediction, pivot tables and VBA scripting.

Having a solid understanding of today’s critical programming languages can help a data professional stand out in the job field. Those new to the field should gain a thorough understanding of core data analytics tools like NumPy, Pandas and Matplotlib. You might also consider learning specific libraries for interacting with web data such as Requests and BeautifulSoup.

Finally, it is helpful for data professionals to learn the inner workings of web visualization. Building visualizations is of little benefit without an effective way to communicate the message. Consider exploring the core technologies of front-end web visualization such as Bootstrap, Dashboarding and Geomapping in addition to the coding specialties above. Learning to use these tools will help any analysts create new, interactive data visualizations that can be shared with everyone on the web.

4) Short and Long Term Goals

Setting long-term goals is just the first step toward your employment success; you should also create a strategy for achieving smaller, short-term goals. These will help keep you motivated and moving forward toward a career in Data Science. For example, if your long-term plan is to work in the industry within one year, you’ll need a tailored path to get there.

Updating your resume and portfolio, networking with industry professionals and taking extra courses are just a few of the short-term goals you may want to consider adding to your action plan.

5) Ability to Adapt

As a data analyst, it is essential to identify the skills gap you have based on future goals. For example, the skills required for data professionals in marketing may be quite different from that of a data scientist in the financial services industry. A vital component of long-term success as a data professional is the ability to adapt your skills and knowledge to evolving business needs.

6) Collaboration Skills

Data scientists do not work alone. They must combine their efforts with business and industry executives to seek out effective strategies. They may have to work with engineers or designers to manufacture better products or with marketing firms to create more effective campaigns. Scientists may share their insights with software engineers or key company stakeholders, and in both cases will need to tailor their communication strategies to do so effectively.

Created by

Comments (0)

Search

Popular categories

Latest blogs

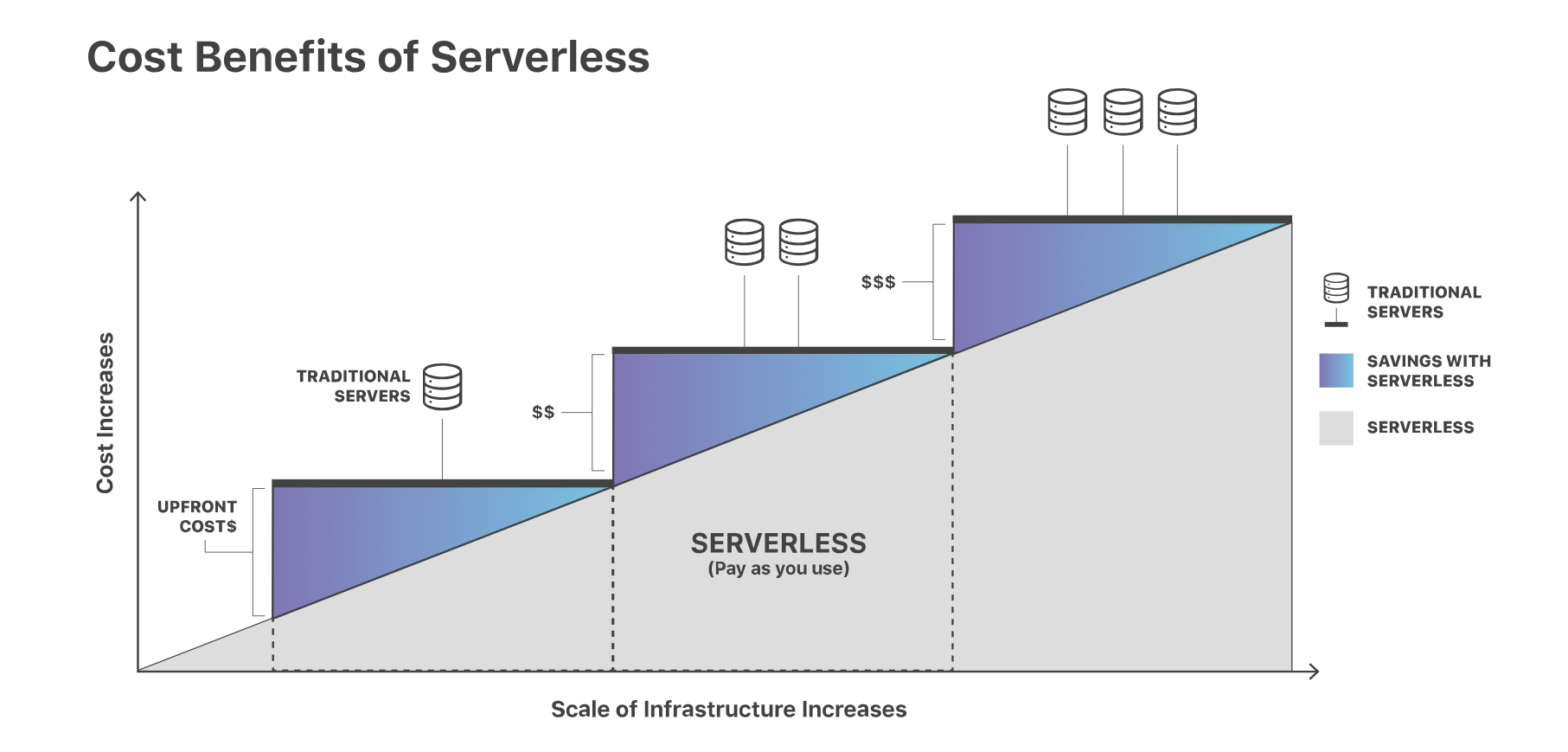

What is serverless computing?

Tue, 23 Jul 2024

Monolithic vs. Micro-services: An Overview

Tue, 21 Mar 2023

ChatGPT: The Future of Chatbots

Tue, 21 Mar 2023

Write a public review